About ACM-IEEE CS George Michael Memorial HPC Fellowships

Endowed in memory of George Michael, one of the founders of the SC Conference series, the ACM-IEEE CS George Michael Memorial HPC Fellowships honor exceptional PhD students throughout the world whose research focuses on high performance computing, networking, storage, and large-scale data analysis. ACM, the IEEE Computer Society, and the SC Conference support this award.

Fellowship recipients are selected each year based on overall potential for research excellence, the degree to which technical interests align with those of the HPC community, academic progress to date, recommendations by their advisor and others, and a demonstration of current and anticipated use of HPC resources. The Fellowship includes a $5,000 honorarium, plus travel and registration to receive the award at the annual SC conference.

Recent HPC Fellowships News

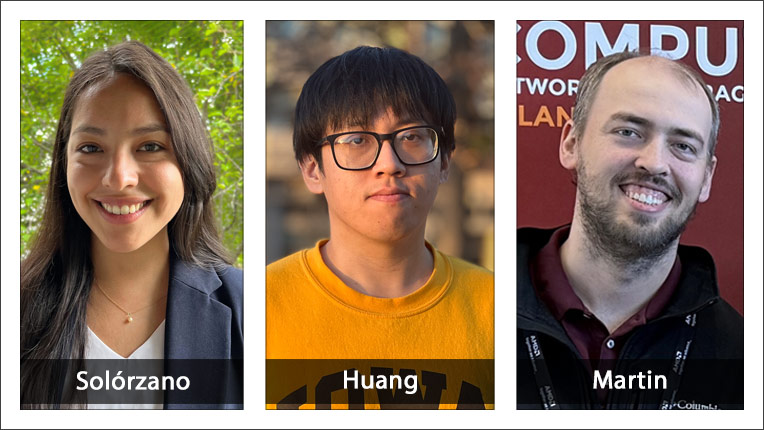

Ana Veroneze Solórzano and Yafan Huang Named Recipients of 2025 ACM-IEEE CS George Michael Memorial HPC Fellowships

Ana Veroneze Solórzano of Northeastern University and Yafan Huang of The University of Iowa are the recipients of the 2025 ACM-IEEE CS George Michael Memorial HPC Fellowships. Aristotle Martin of Duke University receives an honorable mention this year. The George Michael Memorial Fellowship honors exceptional PhD students throughout the world whose research focus is high-performance computing (HPC) applications, networking, storage, or large-scale data analytics.

Solórzano is recognized for broadening the societal impact of HPC using privacy-preserving and incentive-driven mechanisms. Huang is recognized for advancing exascale high performance computing by creating ultra-fast lossy compression algorithms and versatile program-agnostic fault tolerance.

Ana Veroneze Solórzano

Solórzano’s research reflects her interest in advancing the design and operation of HPC systems. She has explored a broad range of technologies and developed solutions that enhance system capabilities. Highlights of her work include an incentive-based power-control strategy on the Fugaku supercomputer, the first production deployment of such a strategy on one of the fastest supercomputers in the world. Fugaku’s incentive program, Fugaku Points, gives users knobs to apply power-control functions and improve the overall power efficiency of the system, contributing to HPC sustainability. The insights from this project have opened new research directions in incentive-driven resource management and user engagement for energy efficiency.

She is one of the leading researchers bringing differential privacy (DP) to the HPC community, that is, protecting personally identifiable information when traces and logs are shared across multiple parties. She designed and implemented the first tool to apply differential privacy to HPC system traces. Her tool supports both aggregated and raw data and lets HPC system administrators configure the amount of noise added and explore different privacy budgets. Solórzano has demonstrated the effectiveness of her tool by evaluating it on several real-world HPC traces.
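The trade-off between the amount of noise and the privacy budget can be illustrated with the standard Laplace mechanism. The sketch below is a generic Python example, not Solórzano's tool: the trace format (a list of dicts with hypothetical `user` and `nodes` fields) and the function names are assumptions made for illustration. A counting query changes by at most 1 when one record is added or removed, so adding Laplace noise with scale 1/ε yields ε-differential privacy for that single release; a smaller ε (a tighter privacy budget) means more noise.

```python
import random

def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponential draws with mean `scale`
    # is Laplace-distributed with location 0 and scale `scale`.
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)

def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    # A count query has sensitivity 1, so Laplace noise with scale 1/epsilon
    # gives epsilon-differential privacy for this single release.
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical job-trace records; only the `nodes` field is queried here.
jobs = [{"user": "u1", "nodes": 1}, {"user": "u2", "nodes": 128},
        {"user": "u3", "nodes": 64}, {"user": "u1", "nodes": 4},
        {"user": "u4", "nodes": 256}, {"user": "u2", "nodes": 2}]

rng = random.Random(42)
for eps in (0.1, 1.0, 10.0):
    noisy = private_count(jobs, lambda j: j["nodes"] >= 64, eps, rng)
    print(f"epsilon={eps:>4}: noisy count of large jobs = {noisy:.2f}")
```

Repeated releases consume additional budget, so a real deployment would also need to track the total ε spent across queries; this sketch handles only one query at a time.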

Yafan Huang

Huang’s primary research focus lies in robust data compression and fault tolerance software support for HPC systems—two areas of critical importance for HPC and large-scale scientific computing. One of the major aspects of Huang’s research is providing robust software support for soft error detection in HPC systems. With the growing scale of supercomputers, soft errors, also known as transient hardware faults, in memory and computation pose a serious threat to reliability. Huang has developed novel techniques that go beyond traditional error detection approaches by considering complex fault patterns. His work also integrates compiler-level code transformations and program analysis to ensure high detection effectiveness without significantly impacting performance.

In addition to his work in fault tolerance, Huang has made groundbreaking contributions to data reduction for scientific computing. He developed cuSZp, an ultra-fast GPU lossy compression framework that significantly outperforms existing state-of-the-art solutions. Traditional compression techniques often struggle to balance speed, compression ratio, and data quality; cuSZp achieves all three through algorithmic innovations and system optimizations, making it a transformative tool for scientific applications that require real-time in-situ data processing and memory footprint reduction.
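To make the balance between compression ratio and data quality more concrete, the sketch below shows the general prediction-plus-quantization idea behind error-bounded lossy compressors. It is a simplified, serial Python illustration under stated assumptions, not cuSZp's GPU algorithm: each value is predicted from the previous reconstructed value (a hypothetical choice of predictor), and the prediction residual is quantized into integer bins of width 2 × `error_bound`, which guarantees that every reconstructed value lies within `error_bound` of the original. On smooth data most integer codes are small, so a subsequent lossless encoding stage (omitted here) can store them compactly.

```python
import math

def compress(data, error_bound):
    """Return integer codes; each code quantizes a prediction residual."""
    step = 2.0 * error_bound
    codes = []
    prev = 0.0  # last *reconstructed* value, so the decoder can repeat the prediction
    for x in data:
        q = round((x - prev) / step)  # nearest bin, so |residual| <= error_bound
        codes.append(q)
        prev = prev + q * step        # exactly what the decoder will reconstruct
    return codes

def decompress(codes, error_bound):
    """Replay the same prediction and add back the dequantized residuals."""
    step = 2.0 * error_bound
    out = []
    prev = 0.0
    for q in codes:
        prev = prev + q * step
        out.append(prev)
    return out

# A smooth signal stands in for scientific field data.
field = [math.sin(0.01 * i) for i in range(1000)]
eb = 1e-3
codes = compress(field, eb)
recon = decompress(codes, eb)
max_err = max(abs(a - b) for a, b in zip(field, recon))
print(f"max error {max_err:.2e} (bound {eb}), distinct codes: {len(set(codes))}")
```

Predicting from reconstructed rather than original values matters: it keeps encoder and decoder in lockstep, so quantization errors do not accumulate.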

Honorable Mention

Aristotle Martin’s work involves developing a heterogeneous, performance-portable multiscale modeling framework leveraging exascale systems for large-scale adhesive transport simulations of circulating tumor cells.

The ACM-IEEE CS George Michael Memorial HPC Fellowship is endowed in memory of George Michael, one of the founders of the SC Conference series. The fellowship honors exceptional PhD students throughout the world whose research focus is on high performance computing applications, networking, storage, or large-scale data analytics using the most powerful computers that are currently available. The Fellowship includes a $5,000 honorarium and travel expenses to attend the SC conference, where the Fellowships are formally presented.

Ke Fan and Daniel Nichols Named Recipients of the 2024 ACM-IEEE CS George Michael Memorial HPC Fellowships

Ke Fan of the University of Illinois at Chicago and Daniel Nichols of the University of Maryland are the recipients of the 2024 ACM-IEEE CS George Michael Memorial HPC Fellowships. The George Michael Memorial Fellowship honors exceptional PhD students throughout the world whose research focus is high-performance computing (HPC) applications, networking, storage, or large-scale data analytics.

Fan is recognized for her research in three key areas of high-performance computing: optimizing the performance of MPI collectives, enhancing the performance of irregular parallel I/O operations, and improving the scalability of performance introspection frameworks. Nichols is recognized for advances in machine-learning-based performance modeling and in large language models for HPC and scientific codes.

Ke Fan

Fan’s research focuses on improving the performance of data movement associated with collective communication and parallel file I/O operations on large-scale supercomputers. Her most recent work has centered on two main areas: (1) optimizing inter-process data movement, particularly in all-to-all collectives, where every process exchanges data with every other process. In this area, she has developed a new class of parameterized hierarchical algorithms that substantially improve the performance of both uniform and non-uniform all-to-all collectives. (2) Optimizing parallel I/O for applications that generate unbalanced, irregular I/O workloads. Here, Fan has developed spatially aware data aggregation techniques that enhance load balancing and improve overall parallel I/O performance.

In addition to these two areas, she has made significant progress in improving the scalability of performance introspection frameworks, which help developers understand data movement capabilities in HPC systems. With these new insights, developers can identify bottlenecks and optimize performance at scale.

Daniel Nichols

Nichols’ research is broadly centered on the intersection of machine learning (ML) and high-performance computing. His most recent work has focused on two main areas: (1) developing novel ML-based performance models that use all available performance data when predicting code runtime properties, and (2) adapting state-of-the-art large language model (LLM) techniques to HPC applications. By building on recent advances in representation learning and extending them to handle the unique challenges of performance modeling, Nichols aims to develop models that exploit all available data when predicting performance. This research has the potential to significantly improve both the quality and applicability of performance models.

By adapting LLMs to HPC applications, Nichols has improved their performance on HPC development tasks. He has created LLMs capable of working with scientific and parallel code, as well as methods for improving the quality of current models for HPC. This work is part of his broader goal of creating specialized LLMs that address software complexity, allowing scientists to focus more on their domain research and less on the intricacies of HPC development.

James Gregory Pauloski, Rohan Basu Roy, and Hua Huang Named Recipients of 2023 ACM-IEEE CS George Michael Memorial HPC Fellowships

James Gregory Pauloski of the University of Chicago and Rohan Basu Roy of Northeastern University are the recipients of the 2023 ACM-IEEE CS George Michael Memorial HPC Fellowships. Hua Huang of the Georgia Institute of Technology received an Honorable Mention. Pauloski is recognized for developing systems for optimal HPC resource usage from scalable optimization methods for deep learning training to data fabrics for sophisticated applications spanning heterogeneous resources. Roy is recognized for enhancing the productivity of computational scientists and environmental sustainability of HPC with novel methods and tools exploiting cloud computing and on-premise HPC resources. Huang is recognized for contributions to high performance parallel matrix algorithms and implementations and their application to quantum chemistry calculations.

J. Gregory Pauloski

Pauloski’s aim is to build tools that are approachable and easily used by HPC novices and experts alike.

His research approaches HPC from two angles: efficient large-scale machine learning (ML) training, and data fabrics that support distributed and federated scientific applications. The rapid increase in demand for AI tools (e.g., ChatGPT and LaMDA) has made scalable deep learning a core challenge for HPC. Pauloski has made advances in system software and algorithms that efficiently use novel hardware systems for AI applications. He has also worked on the development of federated applications that span heterogeneous systems composed of specialized accelerators, edge devices, cloud compute, and HPC. Pauloski’s work on data fabrics enables autonomous actors to communicate efficiently and reliably, independent of location.

In addition to his technical contributions, Pauloski has been cited by his colleagues as a role model and mentor for younger students.

Rohan Basu Roy

Roy designs new tools and methods for enhancing HPC programmer productivity and making large-scale computing systems more cost-effective and environmentally sustainable.

The key challenges computational scientists face include the time required to performance-tune their code and the time required to provision and utilize computing resources efficiently. To address these challenges, Roy has designed HPC performance auto-tuning tools that significantly improve programmer productivity. His research contributions include the first demonstration of significant productivity and performance advantages of the serverless computing model for complex scientific workflows, including quick elasticity in the cloud (eliminating long queue wait times), ease of use, and opportunistic co-location for better resource utilization.

Opportunistically co-locating workloads in the cloud reduces the carbon footprint of on-premise HPC clusters and supercomputers. Roy continues to work toward improving the environmental sustainability of HPC systems, whose carbon footprint is increasing rapidly.

Hua Huang

Huang has focused on developing new algorithms and implementations for high-performance matrix computations. His research problems have mostly come from the area of quantum chemistry and electronic structure calculations. Huang’s innovations have been integrated into widely used codes such as Psi4, NWChem, and SPARC, as well as a proxy application, GTFock, from Georgia Tech.

His many contributions include developing a high-performance, multi-purpose, rank-structured matrix library for a variety of scientific computing tasks and designing innovative parallel algorithms for large-scale matrix operations. He also introduced new optimization strategies for constructing the Fock and density matrices in quantum chemistry calculations.

Marcin Copik and Masado Alexander Ishii Named Recipients of 2022 ACM-IEEE CS George Michael Memorial HPC Fellowships

Marcin Copik of ETH Zurich and Masado Alexander Ishii of the University of Utah are the recipients of the 2022 ACM-IEEE CS George Michael Memorial HPC Fellowships. Shelby Lockhart of the University of Illinois at Urbana-Champaign received an Honorable Mention.

Marcin Copik

Copik’s research bridges the gap between high-performance programming and serverless computing. He is bringing the Function-as-a-Service (FaaS) programming model into the HPC domain by developing high-performance software and hardware solutions for the serverless stack. By solving the fundamental performance challenges of FaaS, he is building a fast, efficient programming model that brings innovative cloud techniques into HPC data centers, allowing users to benefit from pay-as-you-go billing and helping operators decrease running costs and environmental impact. To that end, he has been working on tailored solutions for different levels of the FaaS computing stack, from computing and network devices up to high-level optimizations and efficient system designs. He has also proposed a new design for serverless platforms that applies HPC practices such as low-latency networking, data locality, and efficient communication.

Masado Alexander Ishii

Ishii is the main developer of the University of Utah’s Dendro-KT framework for four-dimensional adaptivity and parallel-in-time formulations. Given the ever-increasing levels of parallelism in the largest machines, parallelizing across space is not sufficient, and for several important problems the inability to parallelize in time is the biggest bottleneck. The Dendro-KT framework addresses this problem and also enables the development of high-order and variable-order-in-time formulations (similar to p-refinement). Working with collaborators, Ishii has also been involved in developing methods and codes for large-scale fluid simulations around complex objects, including a case with multiple complex objects used to evaluate COVID-19 transmission risk in classrooms.

Shelby Lockhart

Lockhart has made contributions in parallel communication, core parallel numerical algorithms, and advancing the capabilities of large-scale predictive simulation. Her focus has been on modeling performance in heterogeneous settings, with an eye toward redesigning message communication “under the hood” (aspects of the high-performance architecture that are not readily visible) as well as making fundamental algorithmic changes to significantly improve achievable performance. Among her research highlights, she has provided detailed communication models to drive the selection of message routing, yielding substantial improvements across a range of problem types. She has also presented a strategy that considerably reduces communication costs on graphics processing unit (GPU) systems by communicating through the host, accounting for different data volumes and GPU counts. Additionally, Lockhart’s thesis work on fixed-point solvers has made important contributions to the Suite of Nonlinear and Differential/Algebraic Equation Solvers (SUNDIALS) project.
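Communication models of this kind are often built on the simple latency-bandwidth (postal, or alpha-beta) model, in which sending n bytes costs α + βn. The Python sketch below is a hypothetical illustration of how such a model could choose between a direct GPU-to-GPU path and a path staged through host memory; it is not Lockhart's model, and all parameter values are made-up placeholders that would have to be measured on a real system.

```python
from dataclasses import dataclass

@dataclass
class Link:
    latency_s: float         # alpha: fixed cost per message, in seconds
    seconds_per_byte: float  # beta: inverse bandwidth

    def time(self, nbytes: int) -> float:
        return self.latency_s + nbytes * self.seconds_per_byte

def direct_time(nbytes: int, gpu_net: Link) -> float:
    # GPU memory -> network -> remote GPU memory in a single transfer.
    return gpu_net.time(nbytes)

def staged_time(nbytes: int, d2h: Link, host_net: Link, h2d: Link) -> float:
    # Copy to host, send host-to-host, then copy to the remote GPU.
    return d2h.time(nbytes) + host_net.time(nbytes) + h2d.time(nbytes)

def choose_path(nbytes: int, gpu_net: Link, d2h: Link, host_net: Link, h2d: Link) -> str:
    direct = direct_time(nbytes, gpu_net)
    staged = staged_time(nbytes, d2h, host_net, h2d)
    return "direct" if direct <= staged else "host-staged"

# Hypothetical parameters, chosen only to illustrate a crossover.
gpu_net = Link(latency_s=10e-6, seconds_per_byte=1 / 12e9)
copy = Link(latency_s=1e-6, seconds_per_byte=1 / 25e9)
host_net = Link(latency_s=2e-6, seconds_per_byte=1 / 12e9)

for size in (8, 4096, 10**6, 10**8):
    print(size, choose_path(size, gpu_net, copy, host_net, copy))
```

With these placeholder numbers, host staging wins below roughly 75 kB because the modeled direct path has a higher fixed latency, while the extra copies make staging slower for large messages.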

2021 ACM-IEEE CS George Michael Memorial HPC Fellowships

Mert Hidayetoglu of the University of Illinois at Urbana-Champaign and Tirthak Patel of Northeastern University are the recipients of the 2021 ACM-IEEE CS George Michael Memorial HPC Fellowships. Hidayetoglu is recognized for contributions in scalable sparse applications using fast algorithms and hierarchical communication on supercomputers with multi-GPU nodes. Patel is recognized for contributions toward making the current error-prone quantum computing systems more usable and helping HPC programmers solve computationally challenging problems.

Mert Hidayetoglu

Sparse operations are the main computational workload for numerous scientific, AI and graph analytics applications. Most of the time, the costs of computation and communication for distributed sparse operations constitute an overall performance bottleneck.

Hidayetoglu’s research investigates this bottleneck at two specific points: memory accesses and communication. He has proposed and demonstrated two novel techniques: sparse matrix tiling and hierarchical communications. The first technique, sparse matrix tiling, accelerates sparse matrix multiplication on a single GPU by preprocessing sparse data access patterns and constructing the necessary data structures accordingly. The second technique, hierarchical communications, eliminates the communication bottleneck, which typically dominates end-to-end execution time when large terabyte (TB)-scale problems fit on only hundreds of GPUs. Hidayetoglu’s technique performs sparse communications (which depend on the sparsity pattern of the matrix) locally first, reducing costly data communication across nodes.
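The benefit of communicating locally first can be illustrated by counting the messages that cross the network. In the hypothetical Python sketch below (not Hidayetoglu's implementation), each message is a `(src_gpu, dst_gpu, nbytes)` tuple and GPUs are assumed to be assigned to nodes in contiguous blocks. Sent directly, every GPU pair on different nodes needs its own inter-node message; if data is first gathered within each node, each pair of nodes exchanges a single aggregated message. This simplified model only reduces the message count; it does not model how the real technique exploits the sparsity pattern.

```python
from collections import defaultdict

def node_of(gpu: int, gpus_per_node: int) -> int:
    # Assumes GPUs are numbered contiguously, node by node.
    return gpu // gpus_per_node

def direct_internode_messages(messages, gpus_per_node):
    # One network message per (src_gpu, dst_gpu) pair on different nodes.
    return sum(1 for src, dst, _ in messages
               if node_of(src, gpus_per_node) != node_of(dst, gpus_per_node))

def hierarchical_internode_traffic(messages, gpus_per_node):
    # Aggregate within each node first: total bytes per (src_node, dst_node) pair.
    traffic = defaultdict(int)
    for src, dst, nbytes in messages:
        s, d = node_of(src, gpus_per_node), node_of(dst, gpus_per_node)
        if s != d:
            traffic[(s, d)] += nbytes
    return dict(traffic)

# Two nodes with four GPUs each; every GPU on node 0 sends 100 bytes
# to every GPU on node 1, plus some intra-node traffic that never leaves node 0.
msgs = [(s, d, 100) for s in range(4) for d in range(4, 8)]
msgs += [(0, 1, 50), (2, 3, 50)]

print(direct_internode_messages(msgs, 4))       # 16 inter-node messages
print(hierarchical_internode_traffic(msgs, 4))  # {(0, 1): 1600}: one aggregated message
```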

Hidayetoglu has successfully demonstrated these techniques in award-winning applications, including large-scale X-ray imaging at Argonne National Laboratory and accelerated sparse deep neural network inference at IBM. Related papers at SC20 and HPEC20 won the best paper award and the Sparse Challenge championship title, respectively.

Tirthak Patel

Patel's research addresses the challenge of erroneous program executions on quantum computers and provides robust solutions to improve their reliability. Despite rapid progress in quantum computing, the prohibitively high noise on existing noisy intermediate-scale quantum (NISQ) computers remains a fundamental roadblock to the wider adoption of quantum computing. Because of this noise, program executions on existing quantum computers produce erroneous outputs, and quantum programmers largely lack the tools to estimate the correct output from these noisy executions.

Patel, advised by Devesh Tiwari at Northeastern University, is designing cross-layer system software for extracting meaningful output from erroneous executions on quantum computers. In particular, he first led the effort to benchmark and characterize the performance of different quantum algorithms on IBM quantum computers. Patel leveraged insights gained from this measurement-based experimental effort to inform the design of his novel tools and methods, including VERITAS, QRAFT, UREQA, and DisQ.

For example, his technique VERITAS demonstrates how carefully designed statistical methods can mitigate errors after program execution and help programmers deduce the correct program output effectively. Patel's other solution, QRAFT, leverages the reversibility property of quantum operations to deduce the correct program output, even when the program is executed on qubits with relatively high error rates. Both approaches relieve programmers and compilers of the burden of selecting the qubits with the lowest error rates, a significant departure from existing approaches in this area. Patel's work also lowers the barrier to entry into quantum computing for HPC programmers by open-sourcing multiple novel datasets and system software frameworks.

2020 ACM-IEEE CS George Michael Memorial HPC Fellowships

Kazem Cheshmi of the University of Toronto, Madhurima Vardhan of Duke University, and Keren Zhou of Rice University are the recipients of the 2020 ACM-IEEE CS George Michael Memorial HPC Fellowships. Cheshmi is recognized for his work building Sympiler, which automatically generates efficient parallel code for sparse scientific applications on supercomputers. Vardhan is recognized for her work developing a memory-light, massively parallel computational fluid dynamics algorithm that uses routine clinical data to enable high-fidelity simulations at ultrahigh resolutions. Zhou is recognized for his work developing performance tools for GPU-accelerated applications. The Fellowships are jointly presented by ACM and the IEEE Computer Society.

Kazem Cheshmi

In mathematics, a matrix is a rectangular grid of numbers arranged in rows and columns that is used to store, track, and manipulate various kinds of data. In computer science, matrices are used extensively in graphics, where an image can be represented as a matrix in which each entry corresponds to the color and/or intensity of a pixel. Matrix computations have a wide range of practical uses. For example, a 3D graphics programmer can rotate or scale an object by multiplying the coordinates of its points by a transformation matrix. Matrix computations also play an essential role in computer vision, a branch of AI in which a computer learns to identify the contents of images.
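As a small illustration of rotating and scaling with matrices, the Python sketch below multiplies the corner coordinates of a unit square by a 2D rotation matrix and a 2D scaling matrix. It uses 2D and plain lists for brevity; 3D graphics applies the same idea with 3-by-3 (or 4-by-4 homogeneous) matrices. The function names are illustrative only.

```python
import math

def mat_vec(m, v):
    # Multiply a matrix (list of rows) by a column vector.
    return [sum(m[i][j] * v[j] for j in range(len(v))) for i in range(len(m))]

def rotation(theta):
    # Counterclockwise rotation by theta radians about the origin.
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]

def scaling(sx, sy):
    # Stretch by sx along x and by sy along y.
    return [[sx, 0.0], [0.0, sy]]

square = [[0.0, 0.0], [1.0, 0.0], [1.0, 1.0], [0.0, 1.0]]
rotated = [mat_vec(rotation(math.pi / 2), p) for p in square]
scaled = [mat_vec(scaling(2.0, 3.0), p) for p in square]

print([[round(x, 6) for x in p] for p in rotated])
print(scaled)
```

Because both transformations are matrices, they can also be combined into a single matrix by multiplying them first, which is why graphics pipelines chain many transformations cheaply.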

Historically, mathematicians would develop algorithms for matrix computations, and software engineers would write programs to make the algorithms run on powerful parallel computers. However, with the emergence of massive datasets, traditional approaches to matrix computation are often inadequate for the enormous matrices that arise in areas such as data analytics, machine learning, and high performance computing, which require increasingly complex algorithms.

To address this problem, Cheshmi has developed Sympiler, a domain-specific compiler (a program that translates source code written in a programming language into code a computer can execute). Sympiler generates high-performance code for sparse numerical methods and can process complex matrix computations derived from massive datasets. Sympiler has been extended to nonlinear optimization algorithms, where it outperforms existing nonlinear optimization tools and scales to some of the most powerful high performance computers. Cheshmi’s work was also accepted to SIGGRAPH 2020, where he demonstrated how he is using Sympiler in robotics and graphics applications.

Madhurima Vardhan

Despite recent advances, cardiovascular disease (CVD) remains the leading cause of death worldwide. In the field of high performance computing, some researchers develop algorithms that run on powerful supercomputers to create visual simulations of complex biological processes. These simulations can help researchers better understand how to treat disease. Currently, a form of simulation called computational fluid dynamics (CFD) simulation is used in health clinics to provide noninvasive diagnosis of CVDs.

However, existing state-of-the-art CFD simulations do not provide high-fidelity real-time diagnosis of CVDs. These limitations stem from a variety of factors, including problems with model accuracy based on the patient images that comprise the datasets; the extensive memory requirements of these kinds of simulations; the long runtimes on high performance computers that are required for these kinds of simulations; and teaching physicians how to effectively use these simulations.

To address these problems, Vardhan is developing a new kind of CFD algorithm that uses routine patient image datasets to produce high-fidelity simulations at ultra-high resolutions. Her algorithm is memory-light (that is, it uses less memory than existing algorithms) and massively parallel (shown to scale on supercomputers). As part of her PhD work, she also completed a study of how physicians interact with simulation data and how physician behavior might be modified in treatment planning.

Keren Zhou

In the last 10 years, graphics processing units (GPUs) have become a critical component in high performance computing systems. For example, five of the top 10 supercomputers in the world today use GPUs to accelerate the performance of applications in various domains. These systems must be designed to avoid common GPU performance problems, and identifying specific performance problems can be challenging.

Working with his advisor John Mellor-Crummey and others, Zhou has taken the lead in developing performance tools for GPU-accelerated supercomputing to help programmers detect program inefficiencies and provide optimization advice.

Their work has already been well received in academia and industry. Zhou and his colleagues have published three papers in top-tier conference proceedings. They are also collaborating with GPU vendors, including AMD, Intel, and NVIDIA; they have submitted a collection of bug reports and offered advice about how to improve their GPU hardware and software measurement interfaces.

2019 ACM-IEEE CS George Michael Memorial HPC Fellowships

Milinda Shayamal Fernando of the University of Utah and Staci A. Smith of the University of Arizona are the recipients of the 2019 ACM-IEEE CS George Michael Memorial HPC Fellowships. Fernando is recognized for his work on high performance algorithms for applications in relativity, geosciences and computational fluid dynamics (CFD). Smith is recognized for her work developing a novel dynamic rerouting algorithm on fat-tree interconnects. The Fellowships are jointly presented by ACM and the IEEE Computer Society.

Milinda Fernando

New discoveries in science and engineering are partially driven by simulations on high performance computers, especially when physical experiments would be infeasible or impossible. Fernando’s research is focused on developing algorithms and computational codes that enable scientists working in many disciplines to use modern supercomputers effectively.

His key objectives include making computer simulations on high performance computers easy to use (by using symbolic interfaces and automated code generation); portable (so they can run across different computer architectures); high-performing (so they make efficient use of computing resources); and scalable (so they can solve larger problems on next-generation machines).

Fernando’s work has enabled improved applications in computational relativity and gravitational wave (GW) astronomy. When two supermassive black holes merge, they bring along corresponding clouds of stars, gas, and dark matter. Modeling these events requires powerful computational tools that consider all the physical effects of such a merger. Existing algorithms and codes for simulating black hole mergers were limited because they could only handle cases in which the masses of the two black holes were comparable. Fernando developed algorithms and code for mergers of black holes, or neutron stars, with vastly different mass ratios. These simulations help scientists understand the early universe as well as what is going on at the heart of galaxies.

Staci Smith

A general problem in high performance computing occurs when multiple distinct jobs running on supercomputers send messages at the same time, and these messages interfere with each other. This inter-job interference can significantly degrade a computer’s performance.

Smith’s first research paper in this area, “Mitigating Inter-Job Interference Using Adaptive Flow-Aware Routing,” received a Best Student Paper nomination at SC18, the premier supercomputing conference. The paper had two goals: to explore the causes of network interference between jobs in order to model it, and to develop a strategy to mitigate that interference.

As a result of this work, Smith recently developed a new routing algorithm for fat-tree interconnects called Adaptive Flow-Aware Routing (AFAR), which improves execution time by up to 46% compared with default routing algorithms. As part of her ongoing PhD research, she continues to develop algorithms to improve the performance and efficiency of HPC workloads.

2018 ACM-IEEE CS George Michael Memorial HPC Fellowships

Linda Gesenhues (Federal University of Rio de Janeiro) and Markus Höhnerbach (RWTH Aachen University) are the recipients of the 2018 ACM-IEEE CS George Michael Memorial HPC Fellowships. Gesenhues is being recognized for her work on finite element simulation of turbidity currents with an emphasis on non-Newtonian fluids. Höhnerbach is being recognized for his work on portable optimizations of complex molecular dynamics codes. The Fellowships are jointly presented by ACM and the IEEE Computer Society.

Gesenhues’ work on turbidity currents may be a useful tool for scientists studying underwater volcanoes, earthquakes, or other geological phenomena on the sea floor. Fluids, including water, become turbid when the concentration of suspended particles, such as sediment, rises above a particular threshold. Because of their greater density, turbid fluids move differently than clear fluids, frequently cascading downslope under gravity. The presence of turbidity currents can indicate that mud and sand have been loosened by collapsing slopes, earthquakes, or other phenomena. For these reasons, scientists regularly place turbidity sensors on the sea floor to monitor geologic activity.

One challenge in understanding turbidity currents is cataloging the range of possible movements a fluid may make depending on variables in its surrounding environment. Supercomputers, which can evaluate an enormous number of scenarios, are therefore an effective tool. The objective of Gesenhues’ PhD project is to obtain a model for numerical simulation of turbidity currents that can predict the characteristics of such flows using non-Newtonian fluid behavior. Whereas a Newtonian fluid such as water has a constant viscosity, a non-Newtonian fluid such as shampoo has a viscosity that changes with the applied stress or shear rate.
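One common way to describe non-Newtonian behavior is the power-law (Ostwald-de Waele) model, in which the apparent viscosity depends on the shear rate as μ = K·γ̇^(n−1). The short Python sketch below evaluates this model; it is a generic textbook illustration, not the rheological model used in Gesenhues' solver, and the parameter values are hypothetical. With n = 1 the model reduces to a Newtonian fluid of constant viscosity K; n < 1 gives shear-thinning behavior and n > 1 shear-thickening.

```python
def apparent_viscosity(shear_rate: float, k: float, n: float) -> float:
    """Power-law (Ostwald-de Waele) model: mu = k * shear_rate**(n - 1).

    k is the flow consistency index (Pa*s^n) and n the flow behavior index.
    """
    if shear_rate <= 0.0:
        raise ValueError("shear rate must be positive for the power-law model")
    return k * shear_rate ** (n - 1.0)

# Hypothetical parameters, for illustration only.
for rate in (1.0, 10.0, 100.0):
    newtonian = apparent_viscosity(rate, k=1e-3, n=1.0)
    thinning = apparent_viscosity(rate, k=2.0, n=0.5)
    print(f"shear rate {rate:>5} 1/s: Newtonian {newtonian:.1e} Pa*s, "
          f"shear-thinning {thinning:.3f} Pa*s")
```

The power law is simple but unbounded as the shear rate approaches zero, which is one reason simulation codes often use regularized or more elaborate rheological models.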

Thus far, Gesenhues has developed, implemented, and verified a “solver” (a numerical model) for 2D simulation of turbidity currents. She recently extended her 2D solver to 3D and has run first tests on small 3D benchmark problems, including a column collapse.

Markus Höhnerbach’s research focuses on creating simulations for many-body potentials in molecular dynamics (MD) simulations. MD simulations are an indispensable research tool in computational chemistry, biology and materials science. In an MD simulation, individual atoms are moved time step by time step according to the forces derived from a so-called potential, the mathematical law that governs the interactions between atoms. The general idea of Höhnerbach’s PhD project is to develop methods and tools that make the implementation of MD simulations simple and correct by design while generating fast code for multiple platforms. For example, in his paper, “The Vectorization of the Tersoff Multi-Body Potential: An Exercise in Performance Portability,” he demonstrated high performance for one type of MD simulation across a wide variety of platforms and processors.

Recently, Höhnerbach has been working on MD simulations with the adaptive intermolecular reactive bond order (AIREBO) potential, which is frequently used to study carbon nanotubes. Many believe carbon nanotubes hold great potential for the future of computer architecture. Höhnerbach wrote code for the AIREBO potential that has achieved 3x to 4x speedups in realistic large-scale runs on current supercomputers.

2017 ACM/IEEE George Michael Memorial HPC Fellowships

Shaden Smith (University of Minnesota) and Yang You (University of California, Berkeley) are the recipients of the 2017 ACM/IEEE-CS George Michael Memorial HPC Fellowships. Smith is being recognized for his work on efficient and parallel large-scale sparse tensor factorization for machine learning applications. You is being recognized for his work on designing accurate, fast, and scalable machine learning algorithms on distributed systems.

Shaden Smith’s research is in the general area of parallel and high performance computing with a special focus on developing algorithms for sparse tensor factorization. Sparse tensor factorization facilitates the analysis of unstructured and high dimensional data.

Smith has made several fundamental contributions that have already advanced the state of the art in sparse tensor factorization algorithms. For example, he developed serial and parallel algorithms for Canonical Polyadic Decomposition (CPD) that are over five times faster than existing open source and commercial approaches. He also developed algorithms for Tucker decompositions that are up to 21 times faster and require 28 times less memory than existing algorithms. Smith’s algorithms run efficiently on systems ranging from a small number of multi-core/manycore processors to tens of thousands of cores.

Yang You’s research interests include scalable algorithms, parallel computing, distributed systems and machine learning. As computers spend an increasing share of their time and energy transferring data (i.e., communicating), algorithms that reduce communication within systems are becoming essential. In well-received research papers, You has made several fundamental contributions that reduce communication between levels of a memory hierarchy or between processors over a network.

In his most recent work, “Scaling Deep Learning on GPU and Knights Landing Clusters,” You aims to speed up neural network training by redesigning networks that are relatively slow to train so that they scale on high performance clusters. This approach has reduced the percentage of communication from 87% to 14% and resulted in a five-fold increase in speed.

2016 ACM/IEEE George Michael Memorial HPC Fellowships

Johann Rudi of The Institute for Computational Engineering and Sciences (The University of Texas at Austin) and Axel Huebl of Helmholtz-Zentrum Dresden-Rossendorf (Technical University of Dresden) are the recipients of the 2016 ACM/IEEE George Michael Memorial HPC Fellowships. Rudi is recognized for his work on a recent project, “Extreme-Scale Implicit Solver for Nonlinear, Multiscale, and Heterogeneous Stokes Flow in the Earth’s Mantle,” while Huebl is recognized for his work, “Scalable, Many-core Particle-in-cell Algorithms to Simulate Next Generation Particle Accelerators and Corresponding Large-scale Data Analytics.”

Johann Rudi’s recent research has focused on modeling, analysis and development of algorithms for studying the Earth’s mantle convection by means of large-scale simulations on high-performance computers. Mantle convection is the fundamental physical process within the Earth’s interior responsible for the thermal and geological evolution of the planet, including plate tectonics.

Rudi, along with colleagues from Switzerland and the United States, presented a paper on mantle convection at SC15, the International Conference for High Performance Computing; the paper was awarded the ACM Gordon Bell Prize. Rudi and his team developed new computational methods capable of solving difficult problems based on partial differential equations, such as mantle convection, with optimal algorithmic performance at extreme scales.

Axel Huebl is a computational physicist who specializes in next-generation, laser plasma-based particle accelerators. Huebl and others reinvented the particle-in-cell algorithm to simulate plasma physics in 3D with unprecedented detail on leadership-scale many-core supercomputers such as Titan (ORNL).

Through this line of research, Huebl also derives models to understand and predict promising regimes for applications such as radiation therapy of cancer with laser-driven ion beams. Developed in close collaboration with experimental scientists, their simulations show that plasma-based particle accelerators may yield numerous scientific advances in industrial and medical applications. Huebl was part of a team that was a Gordon Bell Prize finalist at SC13.

Two Students Named Recipients of ACM/IEEE-CS George Michael Memorial HPC Fellowships

Maciej Besta, a PhD student in the Scalable Parallel Computing Lab led by Professor Torsten Hoefler at ETH Zurich, won recognition for his project, "Accelerating Large-Scale Distributed Graph Computations." During his first year as a PhD student, Besta successfully completed several projects related to various HPC subdomains, which secured him the first Google European Doctoral Fellowship in Parallel Computing.

Besta's research interests focus on improving the performance of large-scale graph processing in both traditional scientific domains and emerging big data computations. Besta and his advisor also collaborate with researchers from the Georgia Institute of Technology on designing a novel on-chip topology for future massively parallel manycore architectures that improves performance for the network traffic patterns found in graph processing workloads.

Dhairya Malhotra, a PhD student at the University of Texas at Austin actively working in the field of high performance computing, won recognition for his project, "Scalable Algorithms for Evaluating Volume Potentials." As an undergraduate intern, Malhotra was part of the group that won the 2010 ACM Gordon Bell Prize for "Petascale Direct Numerical Simulation of Blood Flow on 200K Cores and Heterogeneous Architectures," for which he implemented performance-critical GPU code using CUDA.

Malhotra's research focuses on developing fast, scalable solvers for elliptic PDEs such as the Poisson, Stokes and Helmholtz equations. A significant contribution of his research has been the development of the pvfmm library (Parallel Volume Fast Multipole Method) for evaluating volume potentials efficiently.

Harshitha Menon, Alexander Breuer Awarded George Michael Memorial HPC Fellowships for 2014

Harshitha Menon was recognized for her project "Scalable Load Balancing and Adaptive Run Time Techniques" and Alexander Breuer for his project "Petascale High Order Earthquake Simulations."

Harshitha Menon is a PhD candidate at the University of Illinois Urbana-Champaign, advised by Prof. Laxmikant V. Kale. Her research focuses on developing scalable load balancing algorithms and adaptive run time techniques to improve the performance of large-scale dynamic applications. In addition, Harshitha works on optimizing the performance of N-body codes, such as the cosmology simulation application ChaNGa, a collaborative research project between UIUC and the University of Washington.

Alexander Breuer received his diploma in mathematics in 2011 at Technische Universität München (TUM) and is a fourth-year doctoral candidate, advised by Prof. Dr. Michael Bader, at the Chair of Scientific Computing at TUM. In 2012, Alexander and his colleagues established a close collaboration between leading experts in computational science and seismology. The declared goal of this international collaboration is to tackle one of the grand challenges in seismic modeling: "Multi-physics ground motion simulation for earthquake-engineering, including the complete dynamic rupture process and 3D seismic wave propagation with frequencies resolved beyond 5 Hz".

Alexander’s research covers optimizations across the entire simulation pipeline, including node-level performance that leverages SIMD paradigms, hybrid and heterogeneous parallelization up to machine size, and co-design of numerics and large-scale optimizations. In 2014, Alexander and his collaborators received the PRACE ISC Award and an ACM Gordon Bell Prize nomination for their outstanding end-to-end performance reengineering of the SeisSol software package.

ACM Announces 2025 ACM-IEEE CS George Michael Memorial HPC Fellowship Recipients

Ana Veroneze Solórzano of Northeastern University and Yafan Huang of The University of Iowa are the recipients of the 2025 ACM-IEEE CS George Michael Memorial HPC Fellowships. Aristotle Martin of Duke University received an Honorable Mention. The George Michael Memorial Fellowship honors exceptional PhD students throughout the world whose research focus is high-performance computing (HPC) applications, networking, storage, or large-scale data analytics. The Fellowships will be formally presented at the International Conference for High Performance Computing, Networking, Storage, and Analysis (SC25).
